Showing posts with label machine learning. Show all posts

Tuesday, January 3, 2023

Saturday, December 3, 2022

NeurIPS 2022 Skidoo!

Caption this! Said to be from LeCun session…

LLM Awakens - Prof. Chalmers discussed "Could a large language model be conscious?" at the Nov. 28th opening keynote of the 36th annual Neural Information Processing Systems conference, commonly known as NeurIPS, in New Orleans. Read it. ZDNet

Don't lose the compass - “Mortal computation” means analog computers marrying AI closely to hardware will put GPT-3 in your toaster on the cheap. Read it. ZDNet

Tuesday, November 1, 2022

Tuesday, December 17, 2019

The C word - and more

Song Han and Yoshua Bengio:

Y.B.: The C-word, consciousness, has been a bit of a taboo in many scientific communities. But in the last couple of decades, the neuroscientists, and cognitive scientists have made quite a bit of progress in starting to pin down what consciousness is about. And of course, there are different aspects to it. There are several interesting theories like the global workspace theory. And now I think we are at a stage where machine learning, especially deep learning, can start looking into neural net architectures and objective functions and frameworks that can achieve some of these functionalities. And what's most exciting for me is that these functionalities may provide evolutionary advantages to humans and thus if we understand those functionalities they would also be helpful for AI.

Related -

Full transcript

Global workspace theory

Monday, August 19, 2019

Shores of ML

Limits of ML? - I noticed this last week, when looking for Barnum's Bio, that a chap had re-published it and attached his patent. Writer David Streitfeld here investigates the rape of Orwell's work.

"Amazon said in a statement that “there is no single source of truth” for the copyright status of every book in every country, and so it relied on authors and publishers to police its site. The company added that machine learning and artificial intelligence were ineffective when there is no single source of truth from which the model can learn." Really? 1984? https://nyti.ms/33KyQ6v

Wednesday, July 31, 2019

Throwing Optane on Spark

Accelerate Your Apache Spark with Intel Optane DC Persistent Memory from Databricks

Spark is in-memory, cool! But that also brings with it issues. Intel sees it as a use case for its new Optane feast and fowl memory.

Tuesday, July 30, 2019

Latent ODEs for Irregularly-Sampled Time Series

"Latent ODEs for Irregularly-Sampled Time Series"

Time series with non-uniform intervals occur in many applications, and are difficult to model using standard recurrent neural networks (RNNs). The authors generalize RNNs to have continuous-time hidden dynamics defined by ordinary differential equations (ODEs), a model they call ODE-RNNs. Paper by Rubanova et al.: arxiv.org/abs/1907.03907
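For a feel of the ODE-RNN idea, here is a minimal sketch, not the authors' code. Between observations the hidden state evolves under an ODE, and at each observation an ordinary RNN cell update is applied. Everything specific is an assumption made for illustration: the scalar hidden state, the hand-picked weights, the fixed-step Euler integrator, and the names `ode_func`, `evolve`, `rnn_cell` and `ode_rnn`. In the paper the dynamics are a learned neural network and a proper ODE solver is used.

```python
import math


def ode_func(h, w=-0.5):
    # Hypothetical hidden dynamics dh/dt = w * h (simple decay).
    # In the paper this function is a learned neural network.
    return w * h


def evolve(h, t0, t1, steps=100):
    """Integrate dh/dt = ode_func(h) from t0 to t1 with fixed-step Euler."""
    dt = (t1 - t0) / steps
    for _ in range(steps):
        h = h + dt * ode_func(h)
    return h


def rnn_cell(h, x, w_h=0.8, w_x=1.2):
    """Ordinary discrete RNN update, applied only at observation times."""
    return math.tanh(w_h * h + w_x * x)


def ode_rnn(times, values, h0=0.0):
    """Run the sketch over irregularly spaced (time, value) observations."""
    h, t_prev = h0, times[0]
    states = []
    for t, x in zip(times, values):
        h = evolve(h, t_prev, t)  # continuous evolution across the gap
        h = rnn_cell(h, x)        # discrete update at the observation
        states.append(h)
        t_prev = t
    return states


if __name__ == "__main__":
    # Irregular gaps: 0.1, then 1.4, then 0.1 time units.
    print(ode_rnn([0.0, 0.1, 1.5, 1.6], [1.0, 0.5, -0.3, 0.2]))
```

The point of the design is that the length of the gap between observations changes the hidden state before the next update, which a standard RNN stepping once per observation cannot express.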

Friday, September 28, 2018

Name that tune, Now Playing!

RELATED

https://ai.googleblog.com/2018/09/googles-next-generation-music.html - Google AI Blog, Sept 14, 2018

Speaking of Name That Tune – why not a little vignette from the time when Humans Walked the Earth?

Monday, August 20, 2018

How well can neurals generalize across hospitals?

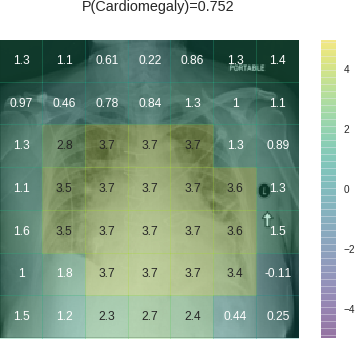

Which features, and in what measure, influence a convolutional neural network’s (CNN’s) decision? Finding the answer in radiology takes work, writes researcher John Zech on Medium. The matter gains importance as researchers look to ‘go big’ with their data and to create models based on X-rays obtained from different hospitals.

Before tools are used to crunch big data for actual diagnosis "we must verify their ability to generalize across a variety of hospital systems" writes Zech.

Among findings:

that pneumonia screening CNNs trained with data from a single hospital system did generalize to other hospitals, though in 2 / 4 cases their performance was significantly worse than their performance on new data from the hospital where they were trained.

He goes further:

CNNs appear to exploit information beyond specific disease-related imaging findings on x-rays to calibrate their disease predictions. They look at parts of the image that shouldn’t matter (outside the heart for cardiomegaly, outside the lungs for pneumonia). Initial data exploration suggests they appear to rely on these more for certain diagnoses (pneumonia) than others (cardiomegaly), likely because the disease-specific imaging findings are harder for them to identify.

These findings come against a backdrop: An early target for IBM’s Watson cognitive software has been radiology diagnostics. Recent reports question the efficacy thereof. Zech and collaborators’ work shows another wrinkle on the issue, and the complexity that may test estimates of early success for deep learning in this domain. - Vaughan
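The kind of check Zech calls for, comparing a model's performance on held-out data from its home hospital with its performance elsewhere, can be sketched roughly as below. This is an illustration only: the `auc` and `auc_by_site` helpers, the site names and the scores are all made up here and are not data or code from the study. AUC is computed by direct pairwise comparison, which is fine for toy sizes.

```python
def auc(labels, scores):
    """Area under the ROC curve by pairwise comparison (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def auc_by_site(records):
    """Group (site, label, score) records by site and compute AUC for each."""
    by_site = {}
    for site, label, score in records:
        labels, scores = by_site.setdefault(site, ([], []))
        labels.append(label)
        scores.append(score)
    return {site: auc(lbls, scrs) for site, (lbls, scrs) in by_site.items()}


# Made-up toy predictions: label 1 = pneumonia, score = model output.
records = [
    ("internal", 1, 0.9), ("internal", 1, 0.8),
    ("internal", 0, 0.3), ("internal", 0, 0.1),
    ("external", 1, 0.6), ("external", 1, 0.4),
    ("external", 0, 0.5), ("external", 0, 0.2),
]

if __name__ == "__main__":
    for site, value in auc_by_site(records).items():
        print(site, value)
```

A gap between the internal and external numbers is the signal to look for. It does not by itself say why the model degrades, which is where the image-region analysis Zech describes comes in.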

Related

https://arxiv.org/abs/1807.00431

https://medium.com/@jrzech/what-are-radiological-deep-learning-models-actually-learning-f97a546c5b98

https://en.wikipedia.org/wiki/Convolutional_neural_network

https://www.clinical-innovation.com/topics/artificial-intelligence/new-report-questions-watsons-cancer-treatment-recommendations

Sunday, August 19, 2018

DeepMind AI eyes ophthalmological test breakthrough

Eye ball to eye ball with DeepMind.

DeepMind, the brainy bunch of British boffins whom Google picked up to carry forward the AI torch, has reported in a scientific journal that it succeeded in employing a common ophthalmological test to screen for many health disorders.

So reports Bloomberg.

DeepMind’s software used two separate neural networks, a kind of machine learning loosely based on how the human brain works. One neural network labels features in OCT images associated with eye diseases, while the other diagnoses eye conditions based on these features.

Splitting the task means that -- unlike an individual network that makes diagnoses directly from medical imagery – DeepMind’s AI isn’t a black box whose decision-making rationale is completely opaque to human doctors, [a principal said].

The group, which encountered controversy over its use of patient data in the past, said it has cleared important hurdles and hopes to move to clinical tests in 2019.

Related

https://www.bloomberg.com/news/articles/2018-08-13/google-s-deepmind-to-create-product-to-spot-sight-threatening-disease

Monday, February 19, 2018

Cybernetic Sutra

I'd had an opportunity in college days to study comparative world press under professor Lawrence Martin Bittman, who introduced BU journalism students to the world of disinformation, a discipline he'd learned first hand in the 1960s, before his defection to the West, as a head of Czech Intelligence. We got a view into the information wars within the Cold War. This gave me a more nuanced view of the news than I might otherwise have known. Here I am going to make a jump.

I'd begun a life-long dance with the news.

I'd also begun a life-long study of cybernetics.

And lately the two interests have begun oddly to blend.

It was all on the back of Really Simple Syndication (RSS) and its ability to feed humongous quantities of online content in computer-ready form. It made me a publisher, as able as Gutenberg, and my brother a publisher, and my brother-in-law a publisher, and on ...

Cybernetics was a promising field of science that seemed ultimately to fizzle. After World War II, led by M.I.T.'s Norbert Wiener and others, cybernetics arose as, in Wiener's words, "the scientific study of control and communication in the animal and the machine."

It burst rather as a movement upon the mass consciousness at a time when fear of technology and the dehumanization of science were a growing concern. As the shroud of wartime secrecy dispersed, Wiener in 1948 penned Cybernetics, which was followed by a popularization.

Control, communication, feedback, regulation. It took its name from the Greek root cyber. Wiener - Brownian motion - artillery tables - development of the thermostat, autopilot, differential analyzer, radar, neural networks, back propagation.

Cybernetics flamed out in a few years, though it made a peculiar reentry in the era of the WWW. Flamed out but, somewhat oddly, continued as an operational style in the USSR for quite some time more. Control, communication, feedback, regulation played out there somewhat differently.

A proposal for a Soviet Institute of Cybernetics included "the subjects of logic, control, statistics, information theory, semiotics, machine translation, economics, game theory, biology, and computer programming."1 It came back to mate with cybernetics on the web in the combination of agitprop and social media, known as Russian meddling, that slightly tipped the scales, arguably, of American politics.

1 http://web.mit.edu/slava/homepage/reviews/review-control.pdf

Sunday, January 21, 2018

AI drive spawns new takes on chip design

As soon as we solve machine learning we will fix printer.

The driver these days is AI, but more particularly the machine learning aspect of AI. GPUs jumped out of the gamer console and into the Google and Facebook data centers. But there was more in the way of hardware tricks to come. The effort is to get around the tableau I have repeatedly cited here: the data scientist sitting there twiddling thumbs while the neural machine slowly does its learning.

I know when I saw that Google had created a custom ASIC for TensorFlow processing, I was taken aback. If new chips are what is needed to succeed in this racket, it will be a rich man's game.

Turns out a slew of startups are on the case. This article by Cade Metz suggests that at least 45 startups are working on chips for AI-type applications such as speech recognition and self-driving cars. It seems the Nvidia GPU that has gotten us to where we are may not be enough going forward. Coprocessors for coprocessors, chips that shuttle data about in I/O roles for GPUs, may be the next frontier.

Metz names a number of AI chip startups: Cerebras, Graphcore, Wave Computing, Mythic, Nervana (now part of Intel). - Jack Vaughan

Related

https://www.nytimes.com/2018/01/14/technology/artificial-intelligence-chip-start-ups.html

Sunday, September 3, 2017

Forensic analytics

While at Bell Labs in the 1980s, Dalal said, he worked with a team that looked back on the 1986 Challenger space shuttle disaster to find out if the event could have been predicted. It is well-known that engineering teams held a tense teleconference the night before the launch to review data that measured risk. Ultimately, a go was ordered, even though Cape Canaveral, Fla., temperatures were much lower than in any previous shuttle flight. A recent article looks at the issues with an eye on how they are related to analytics today.

http://searchdatamanagement.techtarget.com/opinion/Making-connections-Big-data-algorithms-walk-a-thin-line

Tuesday, May 23, 2017

Partners HealthCare: "I sing the General Electric"

Massachusetts-based Partners HealthCare partnered with GE Healthcare last week on a projected 10-year collaboration to bring greater use of AI-based deep learning technology to healthcare across the entire continuum of care. The collaboration will be executed through the newly formed Massachusetts General Hospital and Brigham and Women’s Hospital Center for Clinical Data Science and will feature co-located, multidisciplinary teams with broad access to data, computational infrastructure and clinical expertise.

The deal is something of a stake in the ground, as GE moves its HQ up from Conn to Boston. In the long term, Partners and GE hope to create new businesses around AI and healthcare.

The initial focus of the relationship will be on the development of applications aimed to improve clinician productivity and patient outcomes in diagnostic imaging. It will be interesting, as more details emerge, to see how this effort compares or contrasts with efforts such as IBM Watson Imaging Clinical Review -- a cognitive imaging offering from that company's Watson Health operation as part of a collaborative of 24 organizations worldwide. - Smiling Jack Shroud

http://www.partners.org/Newsroom/Press-Releases/Partners-GE-Healthcare-Collaboration.aspx

http://www-03.ibm.com/press/us/en/pressrelease/51643.wss

Wednesday, April 12, 2017

The road to machine learning

In some spare time last year I reworked a story I'd written for TechTarget on the topic of machine learning and related data infrastructure. I spoke with a few users in the story, so the reworking focuses on their end-use applications, while also covering vendors and technology employed. I did this as a Sway multimedia presentation. I faltered in final completion, in that an audio track was the proverbial bridge too far. So I call it a failure (experience tells us to jump from projects that are "projects of one") but thought it was worth posting here, for experimental purposes. To read the story it is based on, please go to "Machine Learning tools pose educational challenges for users." - Jack Vaughan
