Which image features influence a convolutional neural network's (CNN's) decision? In radiology, finding the answer takes work, writes researcher John Zech on Medium. The question grows more important as researchers look to 'go big' with their data and build models from X-rays obtained at different hospitals.

Before such tools are used to crunch big data for actual diagnosis, "we must verify their ability to generalize across a variety of hospital systems," writes Zech.

Among the findings:

Pneumonia-screening CNNs trained with data from a single hospital system did generalize to other hospitals, though in 2 of 4 cases their performance was significantly worse than their performance on new data from the hospital where they were trained.

He goes further:

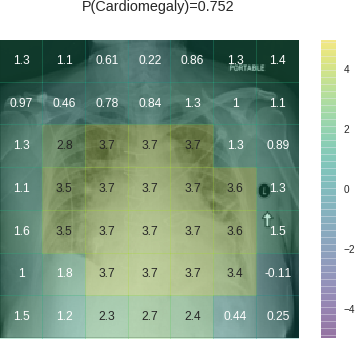

CNNs appear to exploit information beyond specific disease-related imaging findings on x-rays to calibrate their disease predictions. They look at parts of the image that shouldn't matter (outside the heart for cardiomegaly, outside the lungs for pneumonia). Initial data exploration suggests they appear to rely on these regions more for certain diagnoses (pneumonia) than others (cardiomegaly), likely because the disease-specific imaging findings are harder for them to identify.
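To make "looking at parts of the image that shouldn't matter" concrete, here is a minimal sketch of one rough way to quantify it: given a per-pixel saliency map for a prediction and a boolean mask of the anatomically relevant region (the lungs for pneumonia, say), compute what share of the saliency falls outside that region. This is an illustration under stated assumptions, not Zech's method. The function name `fraction_outside_roi` is hypothetical, and it assumes you already have a saliency map (for example, a class activation map) and a region mask from some other tool.

```python
import numpy as np


def fraction_outside_roi(saliency: np.ndarray, roi_mask: np.ndarray) -> float:
    """Share of total absolute saliency lying outside the region of interest.

    Hypothetical helper for illustration only.
    saliency: 2-D array of per-pixel attribution scores (assumed input).
    roi_mask: 2-D array, truthy inside the relevant anatomy (assumed input).
    """
    if saliency.shape != roi_mask.shape:
        raise ValueError("saliency and roi_mask must have the same shape")
    roi = roi_mask.astype(bool)           # ensure ~ is a logical NOT
    weights = np.abs(saliency)            # treat positive/negative evidence alike
    total = weights.sum()
    if total == 0:
        return 0.0                        # no saliency at all: nothing is outside
    outside = weights[~roi].sum()         # saliency mass outside the ROI
    return float(outside / total)


# Toy 4x4 example: the ROI is the central 2x2 block.
sal = np.zeros((4, 4))
sal[1:3, 1:3] = 1.0   # 4 units of saliency inside the ROI
sal[0, 0] = 4.0       # 4 units in a corner, outside the ROI
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
print(fraction_outside_roi(sal, mask))  # 0.5
```

A value near 0 would suggest the model's attention is concentrated on the relevant anatomy; a large value would be consistent with the kind of reliance on outside regions described above. Saliency maps are themselves imperfect explanations, so such a number is a prompt for further checking rather than proof.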

These findings come against a backdrop: radiology diagnostics has been an early target for IBM's Watson cognitive software, and recent reports question its efficacy. The work of Zech and collaborators adds another wrinkle to the issue, highlighting complexity that may test estimates of early success for deep learning in this domain. - Vaughan

Related

https://arxiv.org/abs/1807.00431

https://medium.com/@jrzech/what-are-radiological-deep-learning-models-actually-learning-f97a546c5b98

https://en.wikipedia.org/wiki/Convolutional_neural_network

https://www.clinical-innovation.com/topics/artificial-intelligence/new-report-questions-watsons-cancer-treatment-recommendations
