Thursday, July 23, 2015

Build, Ignite, Azure

In the spring, Microsoft CEO Satya Nadella talked about Microsoft's enduring mission to make technology available to the masses. It is an assertion that has some grounding, but it is hard to say whether Microsoft can find that kind of magic again. This podcast recaps the SQL Server and Azure announcements from Ignite 2015 and Build 2015 that look to move the traditional mission forward. Can Microsoft steal a march on Amazon Web Services? Well, the matter is open for discussion. Some background: this former Webmaster used FrontPage to bring an organization kicking and screaming into the Web era, and the Microsoft tool ($99) played a big part. Click to download the podcast.

Wednesday, July 22, 2015

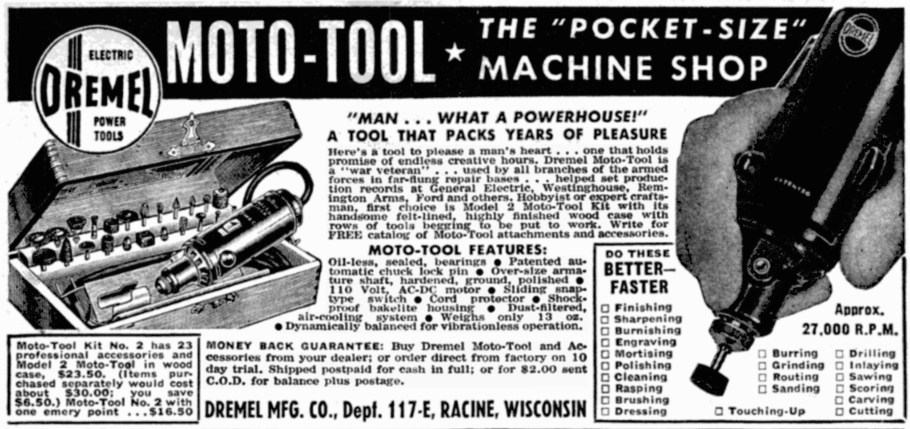

Dremel drill doodling

Think of SQL tools that let the large number of "SQL-able" people ask questions of data. If developers can build the tools, they can enable other people to do the work, and get on with the job of building more tools. And we are back on the usual track of technology history.

Big Web search company Google created Dremel as a complement to, not a replacement for, MapReduce, to enable at-scale interactive analysis of crawled web documents, tracking of install data for applications on the Android Market site, crash reporting for Google products, spam analysis and much more. It brought some SQLability to MapReduce.

For a Wisconsin Badger like me, it does not go without saying that the Google project takes its name from the Dremel Moto-Tool from the Dremel Co. of Racine, Wisconsin. That company, beginning in the 1930s, was among the region's pioneers in small electric motors: not data engines, but engines of progress nonetheless. - Jack Vaughan

Sunday, July 19, 2015

Holes in Mass. Halo: Sitting on Public Records.

When I was a young reporter it was very challenging to get information out of the State of Massachusetts or the City of Boston. It was like a scene from Citizen Kane. The archives were dark and closed. You had to go through conniptions and do legwork. Later, when chance led me to teach Computer Assisted Reporting at N.U., research uncovered pretty good availability of different types of records on the Web. It seemed like something of a flowering. But apparently it was a false bloom.

Massachusetts has gained renown as a liberal bastion: the state spearheaded abolition, voted for McGovern and legalized gay marriage. But for some reason or other it has become a less than liberal fortress when it comes to public records. The statehouse, judiciary and governor's office all claim immunity from records retrieval, as depicted in today's Boston Globe page-one story, "Mass. Public Records Often a Closed Book."

The story has a great lead-in, in which a lawyer doing research on breathalyzers explains that there are states that share such databases for free, states (Wisconsin) that charge $75, and Massachusetts, where the State Police came up with a $2.7-million price tag to share the data. [When pressed, they admitted that they had incorrectly estimated the cost; it should have been $1.2 million.]

A cast of critics weighs in on the situation: Thomas Fiedler of BU's College of Communication; Matthew Segal of the ACLU; Robert Ambrogi, attorney and executive director of the Massachusetts Newspaper Publishers Assn.; Katie Townsend of the Reporters Committee for Freedom of the Press; and others. An interesting decrier of bills aimed at fixing the situation ("costly new unfunded mandates") is the Massachusetts Municipal Assn. Just as interesting is the cameo appearance in the article of Sec. of State William "What Me Worry?" Galvin, whose office is charged with helping oversee public records, and who has no more to say than that "a type of bureaucratic fiefdom" has built up over the years.

Maybe the lottery has diverted that department's attention, and the responsibility for fleecing the poor should be handed over to the Attorney General, another less than bold piece of furniture. With the three previous House Speakers convicted of felonies, everyone has been pretty busy keeping a lid on data. - Jack Vaughan

Saturday, June 27, 2015

Machine and learning, trial and error

His Master's Voice (HMV)

The thing I picked up from Celma's presentation was that you can only get so far with your basic breed of suggestion engine. In the radio days a big voice intoned, "Don't touch that dial." Now something else is in order.

You see, if you play it straight and narrow and give them what you know they want, they get bored and tune out. The element of surprise has been intrinsic to good showmanship since time immemorial. The machines can get better and better, but at a slower and slower rate. People eventually want to come across a crazed Jack Black pushing the 13th Floor Elevator button in High Fidelity.

The machines have trouble predicting whether a viewer will enjoy Napoleon Dynamite, as was précised in the 2008 New York Times Magazine story about the Netflix algorithm contest that fate (my brother cleaning the upstairs) cast upon my stoop.

TO BE CONTINUED

Sunday, June 21, 2015

Momentous tweets for a week in June 2015

The government's plan to regulate facial recognition tech is falling apart http://t.co/O6Tz7puFB1

— Jack Vaughan (@JackIVaughan) June 20, 2015

Nature and Viz http://t.co/WbW1p4TGtU via @DataRemixed

— Jack Vaughan at TT (@JackVaughanatTT) June 18, 2015

Machine-learning algorithm can predict next line in rap songs w 82% accuracy. Cure for cancer still in future. http://t.co/f13Bzilwkh

— Jack Vaughan at TT (@JackVaughanatTT) June 18, 2015

— Jack Vaughan at TT (@JackVaughanatTT) June 16, 2015

Sunday, June 14, 2015

Advance and quandary: Big Data and veterans' health

The era of big data continues to present big quandaries. A New York Times story, "Database May Help Identify Veterans on the Edge," covers the latest brain teaser.

The story points to new research published in the American Journal of Public Health, in which researchers at the Department of Veterans Affairs and the National Institutes of Health described a database they created to identify veterans with a high likelihood of suicide, as the Times story (Fri., June 12, 2015, p. A17) puts it, "in much the same way consumer data is used to predict shopping habits."

The researchers took half of a database of variables somewhat associated with suicide cases between 2008 and 2011 and ran what I assume to be a machine learning algorithm on it. They then tried to predict what would happen with the remaining half of the database population, and concluded that predictive modeling can identify high-risk patients not identified on clinical grounds.
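The validation design described above, fitting a model on one half of the records and then scoring its predictions on the other half, is a split-half holdout. Here is a minimal Python sketch of that idea under loudly stated assumptions: the records, the feature names `prior_attempt` and `substance_use`, and the simple flag-count cutoff rule are all hypothetical stand-ins, not the VA/NIH researchers' actual variables or model, and the data is synthetic.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical record layout: binary risk flags plus a 0/1 "outcome" label.
Record = Dict[str, int]

# Hypothetical feature names, loosely echoing variables mentioned in the post.
FEATURES = ["prior_attempt", "substance_use"]


def split_half(records: List[Record], seed: int = 0) -> Tuple[List[Record], List[Record]]:
    """Shuffle a copy of the records and cut it into a training half and a holdout half."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def flag_count(record: Record) -> int:
    """Number of risk flags set on a record."""
    return sum(record[f] for f in FEATURES)


def accuracy(threshold: int, records: List[Record]) -> float:
    """Share of records where 'flag_count >= threshold' matches the recorded outcome."""
    correct = sum((flag_count(r) >= threshold) == bool(r["outcome"]) for r in records)
    return correct / len(records)


def fit_threshold(train: List[Record]) -> int:
    """'Train' by choosing the flag-count cutoff with the best accuracy on the training half."""
    candidates = range(len(FEATURES) + 2)  # 0 (flag everyone) .. len(FEATURES)+1 (flag no one)
    return max(candidates, key=lambda t: accuracy(t, train))


if __name__ == "__main__":
    # Synthetic toy data only; not drawn from the VA/NIH study.
    rng = random.Random(42)
    data = []
    for _ in range(200):
        rec = {f: rng.randint(0, 1) for f in FEATURES}
        rec["outcome"] = int(flag_count(rec) == len(FEATURES))
        data.append(rec)

    train, holdout = split_half(data)
    cutoff = fit_threshold(train)
    print("cutoff:", cutoff, "holdout accuracy:", accuracy(cutoff, holdout))
```

Choosing the cutoff on one half and scoring it only on the other is the point of the design: accuracy measured on the same records used for fitting would overstate how well the rule carries over to new patients.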

But predicting suicide is not like predicting the likelihood that one might buy a Metallica song, is it? How does the doctor sell the prognosis? "A machine told us you are likely to commit suicide"? Certainly some more delicate alternatives will evolve. A lot of the variables, such as prior suicide attempts and drug abuse, seem patent. Maybe doctors have just been more likely to guess on the side of life. If the Chinese government hacks the database and sells the data, will the chance of suicide follow you like an albatross, and fulfill itself?

Like so much in the big data game, the advance carries a quandary on its shoulder.

Related

http://www.nytimes.com/2015/06/12/us/database-may-help-identify-veterans-likely-to-commit-suicide.html

http://ajph.aphapublications.org/doi/pdf/10.2105/AJPH.2015.302737

http://ajph.aphapublications.org/doi/abs/10.2105/AJPH.2015.302737

Saturday, June 13, 2015

New and notable, week of June 8

MongoDB enters world of swappable data storage engines http://t.co/dI97fPgFDq #mongodbworld

— Jack Vaughan at TT (@JackVaughanatTT) June 9, 2015

"Events on the Internet always leave digital foot prints. "– G.Moore #HadoopSummit (there's got to be a log file somewhere.

— Jack Vaughan at TT (@JackVaughanatTT) June 11, 2015

IPython Notebook as a Unified Data Science Interface for Hadoop by @hadoopsummit #apachehadoop http://t.co/IVsP5ZdECW via @SlideShare

— Jack Vaughan at TT (@JackVaughanatTT) June 11, 2015

Driver Behavior Predictions Application on Zeppelin #hadoopsummit pic.twitter.com/8ggHlz8mZk

— Toru Shimogaki (@shimtoru) June 9, 2015

The Mesosphere Datacenter Operating System is now generally available https://t.co/bkrKbWBKPf #mesosphere

— Jack Vaughan at TT (@JackVaughanatTT) June 9, 2015

Tuesday, May 26, 2015

Molecular sugar simulations on Blue Gene/Q

Researchers working with an IBM supercomputer have been able to model the structure and dynamics of cellulose at the molecular level. It is seen as a step toward better understanding of cellulose biosynthesis and how plant cell walls assemble and function. Cellulose represents one of the most abundant organic compounds on earth with an estimated 180 billion tonnes produced by plants each year, according to an IBM statement.

Using the IBM Blue Gene/Q supercomputer at VLSCI known as Avoca, scientists were able to perform the quadrillions of calculations required to model the motions of cellulose atoms.

The research shows that there are between 18 and 24 chains present within the cellulose structure of an elementary microfibril, far fewer than the 36 chains that had previously been assumed.

To download the research paper, visit: http://www.plantphysiol.org/

To find out more about the Australian Research Council Centre of Excellence in Plant Cell Walls, visit: http://www.plantcellwalls.org.au/

http://www-03.ibm.com/press/us/en/pressrelease/46965.wss

Monday, May 25, 2015

Data Journalism Hackathon

Took part in the NE Sci Writers Assn. Data Journalism Hackathon at MIT's Media Lab in April. iRobot Inc. HQ! We tried to visualize a data story on the California water crisis.

Tool was IPython Notebook. [I got 1+1= to work!] [How cool is this?!] Came up short but learned a lot about manipulating data along the way. My colleagues were par excellence. The greatest fun I know is to be part of a team that is firing on all cylinders. Gee, it looked nice outside, tho. Playing hooky on part 2! - Jack Vaughan

code-workshop.neswonline.com || CartoDB || geojson.org

ipython.org/notebook.html || more to come
